not much happened today

AI News Recap: December 4-5, 2025

A quiet end to NeurIPS with AI news from December 4-5, 2025. This recap covers updates from 12 subreddits, 544 Twitters, and 24 Discords, saving an estimated 681 minutes of reading time.

AI Twitter Recap

Reasoning/Coding Models and Inference Infrastructure

- vLLM 0.12.0: Introduced DeepSeek support with a "thinking" mode, tokenizer/tool-call parsers, and correct chat_template usage. Version 0.12.0 includes experimental GPU Model Runner V2, Prefill Context Parallel groundwork for long contexts, EAGLE speculative decoding improvements, and NVFP4/W4A8/AWQ quantization. The baseline is PyTorch 2.9.0 + CUDA 12.9.

- NVIDIA CUDA Tile: NVIDIA introduced CUDA Tile IR and cuTile Python for higher-level GPU programming, shifting from thread-level SIMT to tile-based kernels optimized for Tensor Cores/TMAs. Current tooling targets Blackwell-class GPUs.

- Transformers v5 RC: Hugging Face released AutoModelForMultimodalLM and an any-to-any pipeline for multimodal inputs/outputs (e.g., Gemma3n all-modalities-to-text, Qwen3-Omni text+audio).
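To make the tile-based model from the CUDA Tile item concrete, here is a minimal pure-Python sketch of a tiled matrix multiply. It does not use cuTile Python or CUDA Tile IR, and the names `tiled_matmul` and `tile` are hypothetical. It only illustrates the idea that the unit of work is a block of the output rather than a single element handled by a single thread.

```python
def tiled_matmul(a, b, tile=2):
    """Multiply a (m x k) by b (k x n), computing one output tile at a time."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    # Each (i0, j0) pair names one output tile; on a GPU this would be the unit of work.
    for i0 in range(0, m, tile):
        for j0 in range(0, n, tile):
            # Walk the shared K dimension in tile-sized steps, accumulating partial products.
            for k0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, m)):
                    for j in range(j0, min(j0 + tile, n)):
                        acc = 0.0
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] += acc
    return c


if __name__ == "__main__":
    a = [[1, 2, 3], [4, 5, 6]]
    b = [[7, 8], [9, 10], [11, 12]]
    print(tiled_matmul(a, b, tile=2))
```

In a real tile-based kernel the three inner loops would collapse into a single tile-level multiply-accumulate that the compiler maps onto Tensor Cores; the sketch keeps them explicit so the decomposition stays visible.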

Agent Platform Updates

- LangChain: Added content-moderation middleware for agents and cost tracking for custom tools/API calls. Their DeepAgents CLI achieved ~42.7% on Terminal Bench 2.0.

- Together AI & Meta: Launching production-grade RL on TorchForge to support long-horizon agent workflows.

- SonarSource: Released a SonarQube MCP server for enterprise-grade static analysis integrated with AI codegen tools.

- Kimi CLI: Integrates with JetBrains IDEs via ACP. Cline added `gpt-5.1-codex-max`.

- Quantization: Models can be compiled with `quanto` (memory monitoring recommended for Qwen3-VL).

Ecosystem Economics

- OpenRouter: Reasoning models now exceed 50% of OpenRouter usage. Chinese-trained closed models drive significant traffic, while open-weights token use has plateaued. The market is bifurcating between premium models for coding and cheaper/open models for roleplay/creative tasks.

Multimedia Models

- Kling Video 2.6: Features native, in-sync audio. Kling O1 adds persistent subject memory and consistency.

- Runway Gen-4.5: Offers fine-grained aesthetic control. Concurrent research includes Light-X (4D video rendering) and BulletTime (decoupled time/camera control).

- Qwen3-TTS: Launched with 49+ voices, 10 languages, and natural prosody.

- Gemini 3 Pro: Highlights "derendering" complex docs, screen understanding, spatial trajectory generation, and high-FPS video analysis.

- Live Preference Signals: Opus 4.5 Online models lead the Yupp Live Leaderboard. BytePlus Seedream 4.5 is climbing, and Moondream demoed aerial segmentation.

Evals, Leaderboards, and Agent Operations

- Arena and ARC: LM Arena introduced "Arena Expert" for difficult prompts. Skepticism over leaderboard placements prompts calls for eval rigor. The ARC Prize 2025 Grand Prize remains unclaimed.

- Agents in Production (MAP): Study finds productivity gains but reliability is a blocker; human oversight is common.

- RL Robustness: Dr. GRPO collapses off-policy, while other approaches converge. Practitioner notes emphasize environment/tool reliability and avoiding reward hacking.

- Prompt Evolution: GEPA rapidly rewrites prompts to double extraction accuracy.

Open Models, Datasets, and Tooling

- Open Weights Imaging: FLUX.2 leads the Artificial Analysis Image Arena for open-weights T2I and editing. LongCat-Image and LongCat-Image-Edit released.

- Datasets and Methods: MixtureVitae offers a permissive pretraining dataset. Intel’s SignRoundV2 reports progress in low-bit PTQ.

- Authoring/Research Agents: PaperDebugger is an Overleaf plugin. PosterCopilot adds layer-wise editing. Agentic Context Engineering released an official implementation.

- Notable OSS: VLQM-1.5B-Coder (English→Manim animation code) fine-tuned on MLX. AnswerDotAI’s clipmd Chrome extension copies DOM to Markdown.

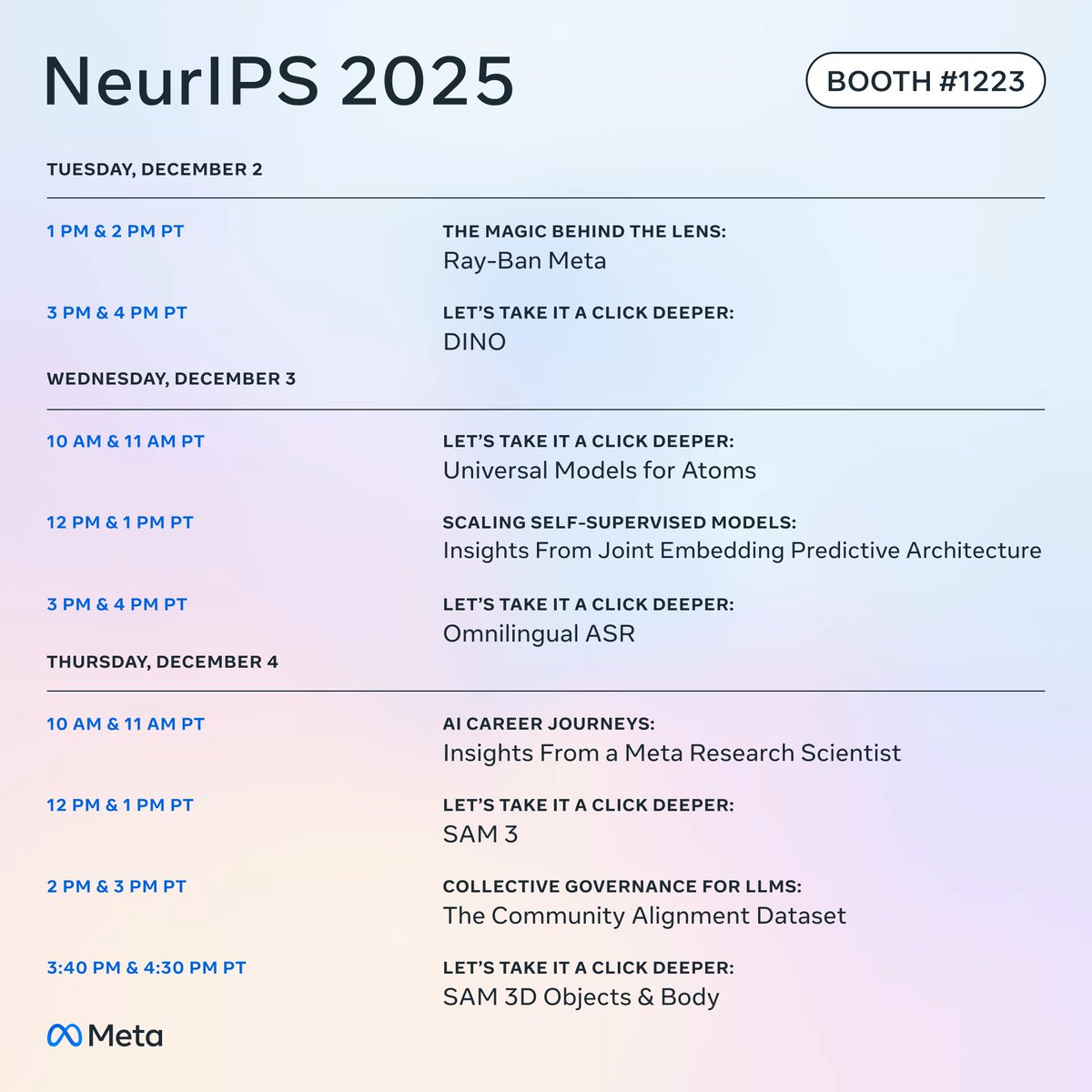

NeurIPS and Community Highlights

- Reasoning and Alignment: Yejin Choi’s keynote mentioned EPO. Sakana AI’s “Continuous Thought Machine” uses test-time compute scaling via continuous dynamics.

- Jobs and Programs: OpenAI Residency applications are open. Google’s Gemini 3 Vibe Coding hackathon offers $500k in API credits. Arena, Sakana AI, and LlamaIndex are hiring.

Top Tweets (by engagement)

- Google’s Gemini 3 Vibe Coding hackathon ($500k prizes).

- Amanda Askell AMA on AI morality, identity, consciousness.

- Qwen3-TTS: 49+ voices, 10 languages, realtime/offline APIs.

- "The boundary between you prompting the model and the model prompting you is going to get blurry in 2026."

- OpenAI Residency applications open.

- Cloudflare outage impacting tooling.

AI Reddit Recap

/r/LocalLlama + /r/localLLM Recap

- AI in Sports Analytics: Basketball AI system using RF-DETR, SAM2, and SmolVLM2 for detection, tracking, and recognition. Potential for soccer applications.

- Hardware as a Service: Discussion of a shift toward cloud-based computing resources (RAM, storage), driven by data center RAM being more profitable than consumer RAM, and what that implies for personal computing.

Less Technical AI Subreddit Recap

- AI Usage in Workplaces: Anthropic study: 86% of workers believe AI enhances productivity, but 69% hide its use due to stigma or fear of job displacement.

- Image Generation and Animation Tools: SteadyDancer shows superior image consistency in video outputs compared to Wan2.2 Animate. Discussions on AI animation realism and environmental interaction.

- Humorous and Creative Illustrations: Memes illustrating digital infrastructure complexity. "Alphabet of Internal Organs" chart for anatomy education. Discussion on AI image generation limitations in anatomical accuracy.

AI Discord Recap

Next-Gen GPU Software: CUDA 13.1, cuTile, and Verified Sparse Attention

- NVIDIA cuTile: Released cuTile library for Python-based GPU programming, targeting TileIR. Bundled with CUDA 13.1. Lacks mxfp/nvfp and fp4 support currently.

- Sparse Attention: Despite extensive research, sparse attention is rarely used in production systems like vLLM. "VATTENTION" paper proposes a practical sparse attention scheme with approximation guarantees.

- RL-Tuned CUDA Kernels: CUDA-L2, an RL-tuned kernel library, reportedly outperforms cuBLAS on matmul.

LLM Benchmarks, Usage Telemetry, and Emerging Model Contenders

- OpenRouter & a16z State of AI Report: Analyzed 100 trillion tokens, showing over 50% of OpenRouter usage is for roleplay, not programming. CODEX MAX underperforms OPUS 4.5 on coding tasks.

- Gemini 3 Performance: On SWE-Bench/OpenHands, Gemini 3 is more expensive and slower than Claude Opus 4.5, with lower scores. GPT-5.1-High caught a bug missed by Opus 4.5, while Gemini 3 missed all bugs.

- Qwen Models: Qwen 1.5-110B-Chat matches larger MoE models on two 80GB GPUs. Free Qwen3-4B endpoint suffers from throttling and downtime.

Tool-Oriented and Cost-Aware Agent Architectures

- Universal Programmatic Tool Calling: Model-agnostic orchestrator slashes tokens by 97-99% by emitting Rhai scripts for tool orchestration.

- MCP Token Accounting: Engineers are wrestling with measuring MCP token usage, noting that tokenization depends on the model family: `tiktoken` for OpenAI, Anthropic’s `count_tokens` API for Claude.

- DSPy and Claude Agents: Discussions on extending DSPy to Claude agents and the real-world performance of the GRPO algorithm for multi-turn conversations.
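The MCP Token Accounting item boils down to "pick the counter that matches the model family". A minimal sketch of that dispatch follows. Everything here is an assumption for illustration: the family names, the `COUNTERS` registry, and `count_mcp_tokens` are hypothetical, and the two counters are rough heuristics standing in for real tokenizers.

```python
from typing import Callable, Dict, List


# Hypothetical stand-in counters. Real accounting would call the family's own
# tokenizer (for example tiktoken for OpenAI models, or Anthropic's token
# counting API for Claude) instead of these rough heuristics.
def whitespace_count(text: str) -> int:
    return len(text.split())


def chars_div_four(text: str) -> int:
    # Rule of thumb of roughly four characters per token; an approximation only.
    return max(1, len(text) // 4) if text else 0


COUNTERS: Dict[str, Callable[[str], int]] = {
    "family-a": whitespace_count,
    "family-b": chars_div_four,
}


def count_mcp_tokens(messages: List[str], family: str) -> int:
    """Sum token counts for a list of MCP payload strings under one model family."""
    try:
        counter = COUNTERS[family]
    except KeyError:
        raise ValueError(f"no tokenizer registered for {family!r}")
    return sum(counter(m) for m in messages)


if __name__ == "__main__":
    payloads = ["list files in repo", '{"tool": "ls"}']
    for family in COUNTERS:
        print(family, count_mcp_tokens(payloads, family))
```

The same payloads produce different totals per family, which is the measurement problem in miniature: a single "MCP token count" is only meaningful once the target model is fixed.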

Hardware Shifts: From TinyCorp GPU Bricks to Legacy NVIDIA Obsolescence

- TinyCorp 1U Server: Teaser of a dense 1U server with 8 water-cooled GPUs.

- NVIDIA Driver Support: Latest drivers end support for Maxwell, Pascal, and Volta cards. Prototyping on AMD’s Strix Halo laptop.

- Apple Silicon Throughput: Qwen4B benchmarks show strong performance on M4 Max, M2 iPad, and iPhone 15 Pro Max, highlighting on-device inference.

Training, Quantization, and Small-Model Alternatives

- Small-LM Training: EleutherAI building training pipelines for LMs under 16GB VRAM. Debate on Hugging Face’s `smol-training-playbook` for from-scratch pretraining on limited RAM.

- Quantization: 4Bit-Forge aims to democratize 4-bit quantization. Toolchain fragmentation remains a challenge.

- Non-LLM Architectures: HRM/TRM models (~27M parameters) reportedly outperform LLMs on benchmarks, challenging the scale race and LLMs' environmental impact.
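For readers new to the 4-bit quantization mentioned above, here is a minimal sketch of the simplest variant: symmetric round-to-nearest with one scale per tensor. This is not 4Bit-Forge's method (the recap does not describe it), and the function names are hypothetical.

```python
def quantize_4bit(weights):
    """Symmetric round-to-nearest quantization of floats to signed 4-bit codes."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Signed 4-bit integers span [-8, 7]; dividing by 7 keeps the scale symmetric.
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_4bit(codes, scale):
    """Map 4-bit codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.7, -0.42, 0.0, 0.1]
    codes, scale = quantize_4bit(weights)
    print(codes, dequantize_4bit(codes, scale))
```

Production schemes typically use per-group scales and calibration data to reduce the rounding error that a single per-tensor scale leaves behind.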

Discord Channel Summaries

- BASI Jailbreaking: Discussions on jailbreaking Gemini 3 Pro, DeepSeek reverse shells, YouTube ad blockers, and AI's role in zombie survival scenarios.

- LMArena: Debates on AI art's artistic form (NSFW content), generating realistic images, bypassing Sora's filters, Cloudflare outage impact, and Gemini 3 Pro's intended use.

- Perplexity AI: Cloudflare outage disruptions, user gripes about Pro limits, Gemini Deep Research compared unfavorably to GPT-5.1 and Claude, and rumors of Cristiano Ronaldo collaboration.

- Cursor Community: Jokes about macOS Sequoia performance issues, RAM usage comparisons, Cursor composer degradation, GPT-5 Codex Max vs Opus 4.5 debate, and approval process problems.

- Unsloth AI: macOS Docker bug, dissatisfaction with Gemini 3 Pro, NVIDIA's undervalued open-source contributions, NYC hackathons, and Hugging Face download speed fixes.

- LM Studio: AI finetuning AI with Gemini 3.0, Qwen 3 coder creating Tetris AI, Alter integrating local AI on macOS, and M4 Max outperforming 4090 on Qwen4b.

- OpenAI: Gemini 3 underperformance on SWE-Bench, Google's spending on Gemini 3, GPT-5.1 catching bugs missed by Gemini 3, ChatGPT's perceived political leaning, and Gemini 3's coding capabilities.

- OpenRouter: State of AI report with a16z, FLUX.2 discussion, CODEX MAX underperforming OPUS, roleplay surpassing programming, and Qwen 4B uptime issues.

- Eleuther: Small LM training under 16GB VRAM, debate on HF's training playbook, Google's Titans and MIRAS for long-term memory, and Brain-Backprop talk.

- GPU MODE: NVIDIA cuTile library release, CUDA programming guide rewrite, sparse attention elusiveness, 4Bit-Forge for quantization, and Strix Halo laptop prototyping.

- Latent Space: Claude generating SQL injection vulnerabilities, Tanstack AI toolkit, Qwen 1.5-110B performance, TinyCorp GPU server tease, and Meta's acquisition of Limitless.

- Modular (Mojo 🔥): Gemini 3's Mojo understanding, Mojo stdlib proposal, Colab T4 GPUs for prototyping, and Mojo open source release plans.

- Yannic Kilcher: Linear control limitations, AI competition catastrophe concerns, robustness vs. performance trade-offs in control, unknown dynamics in robotics, and Bezos's new AI company.

- HuggingFace: DeepSeek v3.2 implementation stalls, HF Space CPU quota issues, seeking small LLM for Roblox, model-agnostic tool orchestrator debut, and HRM/TRM models challenging LLM giants.

- Moonshot AI (Kimi K-2): Kimi for Coding access issues, Claude Code and Roo Code support, desire for community-driven LM tinkerers, and 4x K2 turbo limit.

- MCP Contributors: Seeking MCP token usage analysis tools, tokenization tied to models, `tiktoken` for GPT, and Anthropic's limited tokenizer access.

- aider: Ollama timeout errors, Claude Sonnet 4.5 performance dip, workflow automation engineer introduction, and RAG expertise.

- DSPy: DSPy support for Claude agents, GRPO algorithm exploration, and multi-turn conversations.

- tinygrad: FSDP bounty troubles, USBGPU on Raspberry Pi, and `struct.unpack` GPU implementation.

- Manus.im Discord: Workflow automation, LLM integration, RAG pipelines, AI content detection, and image AI pipeline services offered.